This is a selected part of a technical audit I've conducted as a freelancer for an agency - Hop Online.

The main goal was to allow for more frequent crawling of the valuable product pages and to come up with a strategy that satisfies the business needs, as well.

[Site] Technical Audit

This document outlines the major issues found during Hop Online's technical audit of the website.

In a nutshell

Most of the severe issues found are affecting crawlability. Crawlability is the potential of your site to be visited and read by search engine bots. It is one of the fundamentals of search engine optimization.

Crawlability issues

Before Google, or any other search engine, can evaluate and then rank your website, it should be able to visit and read your pages. This process is called crawling.

Google's official documentation on crawling

There are many factors affecting crawlability. It isn't just about the ability of a URL to be visited by a search engine crawler. A very important aspect is the distribution of the so-called "crawl budget". A search engine has finite resources and computing power, so a site should be easy to navigate through. An abundance of similar or low-value pages, redirected and 404d URLs, and more all affect crawlability negatively.

Public scorecards directory

https://securityscorecard.com/security-rating/

Issue 1: Too many pages, very little traffic

Your /security-rating/ directory contains around 5,780 pages, according to our Screaming Frog crawl. You have reported that the number is much bigger. Just around 70 of them receive any organic traffic. This means a lot of the crawl budget is being spent on pages that do not receive traffic.

You have to consider how important these pages are to you and what is the goal you are trying to achieve with them. This is a business decision, without which we can't provide one SEO solution.

Option 1: Move all pages, improve the content on them

You could follow something similar to the TrustPilot model and establish yourselves as the go-to platform to check the cybersecurity score of a website.

This would require:

- Moving the pages to a separate domain, so you don't exhaust the crawl budget of your product site. With so many /security-rating/ pages, your core product pages are not getting enough crawling resources. Also, the intent of these pages is quite different and requires different optimization.

- Improving the content on these pages so they provide value to users. This way you can establish yourselves as an authority.

Your main product site would still benefit from this separate domain as you will keep the branding and will also have internal links pointing to it.

The biggest problem with this solution is how much time and resources it will require from your team, as this is a huge project.

Option 2: Offer Scorecards as a service only, noindex the pages

You could follow something similar to the DMCA model. One can log in, request a scorecard and also browse other scorecards (behind the login portal). Without logging in, a user can search for a domain and see a yes/no result if the domain already has a scorecard or not. If yes, there's a message to log in and claim it, if not, there's a message to log in and request a scorecard. Scorecards can be then embedded as a badge on the clients' websites and can link to the scorecard page, which will have a noindex tag.

This would require a complete change of the current logic of this portal and a lot of dev involvement.

🧐 Simply putting "noindex" pages doesn't solve the issue with extensive crawling of pages. This is why this solution is utilizing search pages (search requires user interaction, Google can't do this, so it wouldn't have so many paths to reach these pages) and login portals. This will reduce the internal links to these pages. On the other side the "noindex" will assure that this is not low quality content that you're trying to rank.

Option 3: Reduce the number of indexable Scorecard pages

You will have the Top 10, or even a Top 100, page with your most important and popular Scorecards. These will be indexable. But the page with "All scorecards" should be turned into a search page. The user can search for a domain and a result will appear with the scorecard. These scorecards will all be "noindex".

This would allow you to have internal links to the most important pages that might be bringing traffic and to remove the internal links to the thousands of other pages that don't need to be crawled and indexed.

This would require some changes in the current setup of the portal and dev involvement.

Issue 2: URL path inconsistencies

This is a small issue, but have a look at this URL: https://securityscorecard.com/security-rating/companies/technology

If you try to go to just https://securityscorecard.com/security-rating/companies/ it will lead you to a totally different type of page. It is described in Google's documentation that directories in URLs are important for crawling patterns.

Solution: Remove the /companies/ part of the URL path

Issue 3: Industry category pages pagination creating a lot of URLs

Let's take category technology for example. We have:

- https://securityscorecard.com/security-rating/companies/technology → home of the category

- https://securityscorecard.com/security-rating/companies/technology/full-list#all → home of the paginated list

- https://securityscorecard.com/security-rating/companies/technology/full-list?page=2#all → second page of the paginated list (these can be hundreds)

This repeats for every category and you can imagine this adds tons of URLs without much value, which are costly for the crawl budget.

Solution 1: Have just one paginated page

Keep only the main category pages for companies and add filters to this page → https://securityscorecard.com/security-rating/companies/all-industries/full-list#all

If this page has filters, then users can still search by industry. At the same time, we can block the filter parameter in a robots.txt file, so these pages aren't heavy for the crawl budget.

Solution 2: Disallow paginated pages for this directory

In the robots.txt file you can disallow the following path: /all-industries/full-list?page=

This would block Googlebot from accessing these pages.

Even in this scenario, having both:

- https://securityscorecard.com/security-rating/companies/technology → home of the category

- https://securityscorecard.com/security-rating/companies/technology/full-list#all → home of the paginated list

is not optimal, because they have the same intent, so they have to be consolidated. Otherwise they will be considered duplicates. They even have the same titles and H1s:

Partner locator directory

SecurityScorecard Partner Portal | Locator

Issue 1: Thousands of accessible search parameter URLs

These links add search parameters in the URL. There are thousands of combinations, so there are thousands of URLs. The individual value of these is quite low for the search engine, because the content of the different URLs is overlapping and in terms of search potential, there aren't any people using queries such as "SecurityScorecard partners in the US which are solutions providers". Having thousands of low-value URLs is affecting crawlability in a bad way, because a lot of the crawl budget has to be wasted on processing these URLs.

Solution 1: Block the search parameters for this subdomain by robots.txt

The robots.txt file instructs bots (including search engine crawlers) which URLs they can access and which not. Blocking URL paths that are of no value saves crawlers time and resources, which opens up more room for valuable pages to be crawled.

Here's what has to be done:

- Submit a robots.txt file for https://partners.securityscorecard.com/

- Disallow *?f0=

- Disallow *?s=

🧐 We aren't disallowing just *? because the pagination is also using parameters. The pagination should be crawlable, since this is the only way Google can access the individual partner pages. Some might argue that these pages are of no value either. But this wouldn't be an issue solved by disallowing. If a page has no value or is out of context, its content or intent should be revised. You might want to think whether you actually need these pages and if yes, shouldn't they be behind a login portal.

⚠️ Use the robots.txt file with extreme caution and with the assistance of an SEO expert. Disallowing in robots.txt is used in rare cases, when nothing else can be done to fix the issue.

Solution 2: Move all partner locator pages behind a login wall

If these pages are not valuable to every user, but just the active partners, then just move them behind a login wall and disallow the whole /english/ & /English/ path in robots.txt

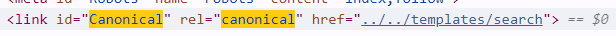

Issue 2: Partner locator pages are canonicalized to 404 pages

(only if you choose solution 1)

Google's documentation on canonicalization

A canonical relation shows which is the original URL. It is used in very specific cases where we have two extremely similar or identical pages, for example, when we are using tracking parameters when the content of the page is the same, but the URL is different.

The partner locator pages are canonicalized to 404 pages, which is incorrect. We wouldn't want to lead Googlebot to a "broken" page.

Solution: Set up self-referencing canonicals

In this case, there is no need to specify a different canonical.

🧐 This includes the paginated URLs. They should also have self-referencing canonical so the individual partner pages can be crawled without defects. Canonicalization doesn't block a URL from getting crawled, but it reduces the rate it is crawled with. If you don't want these individual pages to be crawled, there are other measures to take. As discussed in the previous section, you can rethink if you actually need these pages and if they should be moved behind a login portal. If you keep them as they are, you can reduce the crawl rate by balancing out the number of internal links to them. This is the clean way to do it.

Trailing slashes

Issue 1: Inconsistencies in with/without trailing slash canonical URLs

Look at these URLs:

- https://securityscorecard.com/security-rating

- https://securityscorecard.com/platform/security-ratings/

As you can see, one of them has a trailing slash and the other one doesn't. You should decide on a trailing slash logic to follow consistently. Most of the indexable URLs on your site (excluding the /security-rating/ folder) have a trailing slash, so we recommend following this logic.

Consistency is important so you can set canonical attribute rules and redirect rules.

Solution: Set a standard that all canonical URLs should be with a trailing slash.

Issue 2: URLs with misplaced trailing slash don't redirect

If you try to access this page → https://securityscorecard.com/why-securityscorecard/customer-stories, you will see that it is possible. There is a canonical in place, signaling that this is not the original version of the page.

Even though this is not a huge issue, it might lead to confusion. You might get a lot of links to a non-canonical version of the URL and it might get indexed. Also, now you have a lot of non-canonical URLs getting crawled, and just like we mentioned in the beginning - we don't want you to waste your crawl budget.

Solution: Set up a redirect rule consistent with your trailing slash logic

E.g https://securityscorecard.com/why-securityscorecard/customer-stories should redirect to -> https://securityscorecard.com/why-securityscorecard/customer-stories/

🧐 Keep in mind that in the beginning this will cause a bit of confusion to Google. There will be quite a few redirects and also the canonicals recorded would no longer be canonical. But in the end you will have a clean website with a clear architecture.